This article shows step by step how to setup all the cloud serverless components to store the Split Impressions data in a cloud database and allow downloading on-demand Impression data in a csv file format.

Split Admin site provide a webhook integration option to send the Impression data as they are injected into Split cloud, we will use the webhook to extract Impression data.

We will also use AWS API Gateway as the Webhook service, the API Gateway will forward the post request to AWS Lambda, which will extract the Impression information and store it in a AWS Postgresql database.

We can then use Python script to extract data from the PostgreSQL database with multiple combination of conditions for User key, treatment, feature flag name, timestamp and rule.

Here are the steps to setup the integration:

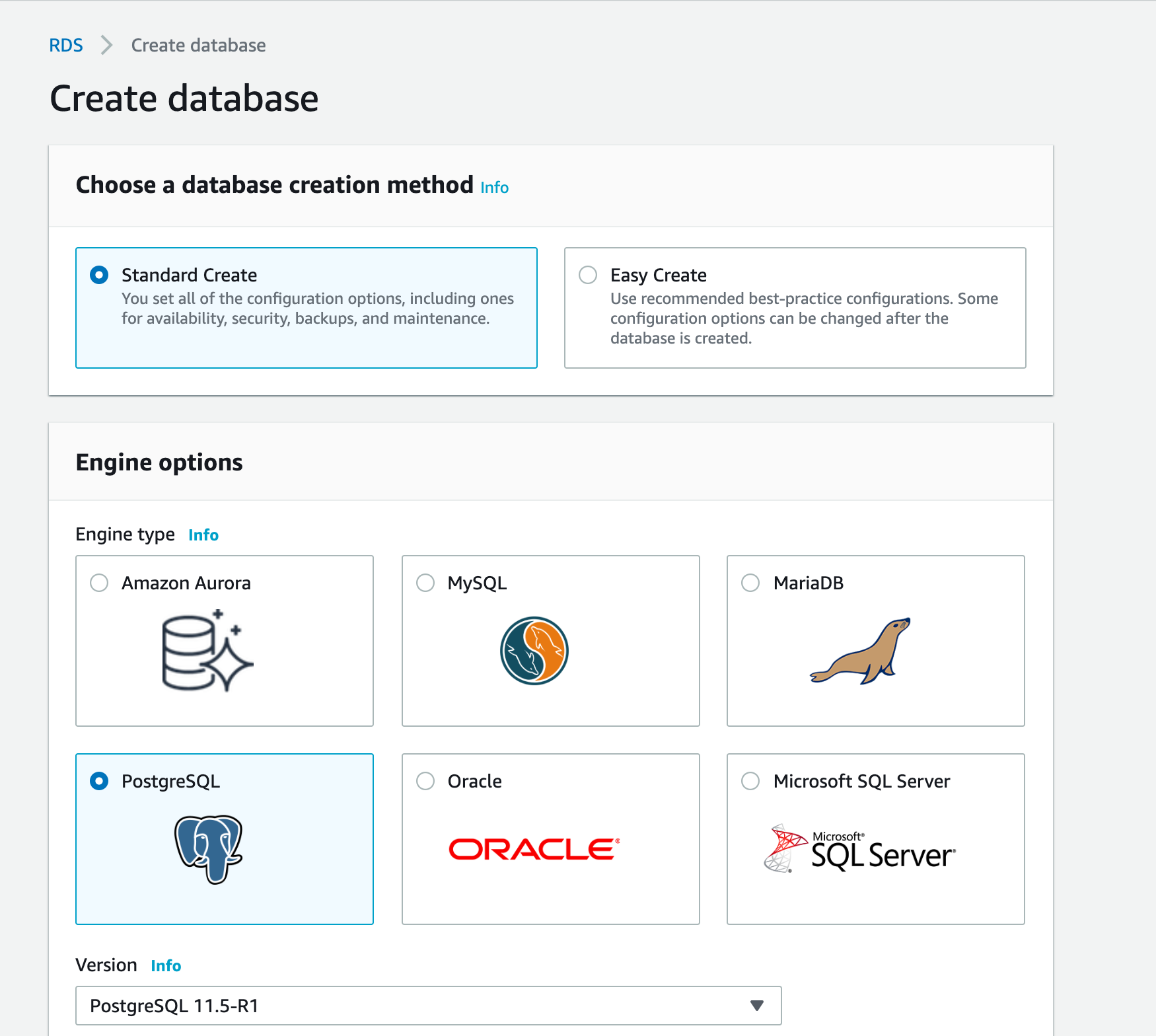

- First step is to create our cloud database instance, login to AWS and select Services->RDS.

- Click on PostgreSQL option, leave the latest version pre-selected.

- Decide which template you like to use based on the expected amount of Impressions that will be flowing in your database, checkout the JSON structure for each impression here, consult with a Database Administrator if needed. In our example we selected the free tier as this is an example service.

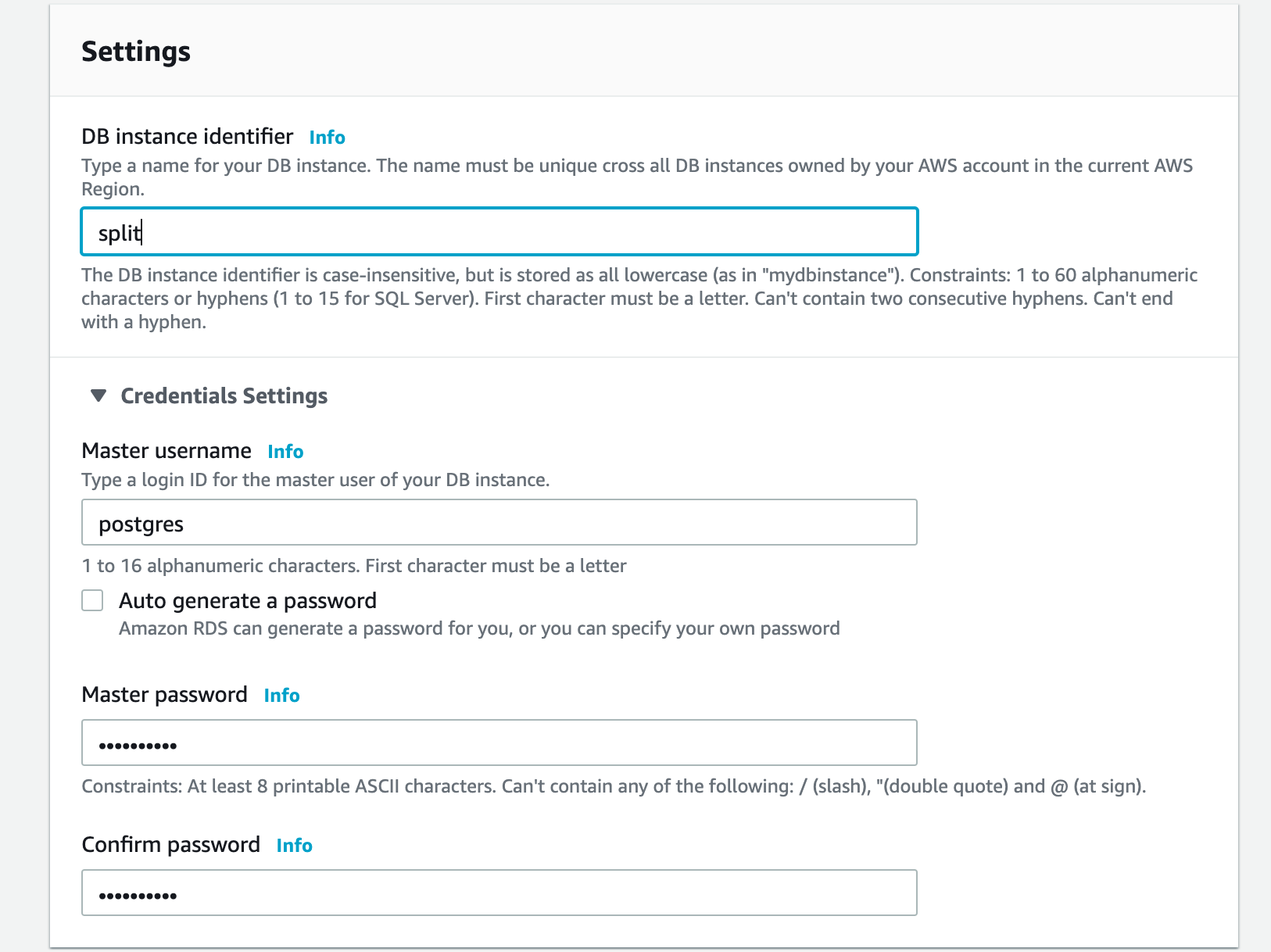

- Set the database name (in our example it is split) and the postgres user password.

- Select the DB instance size and amount of storage assigned to it, and click Create Database.

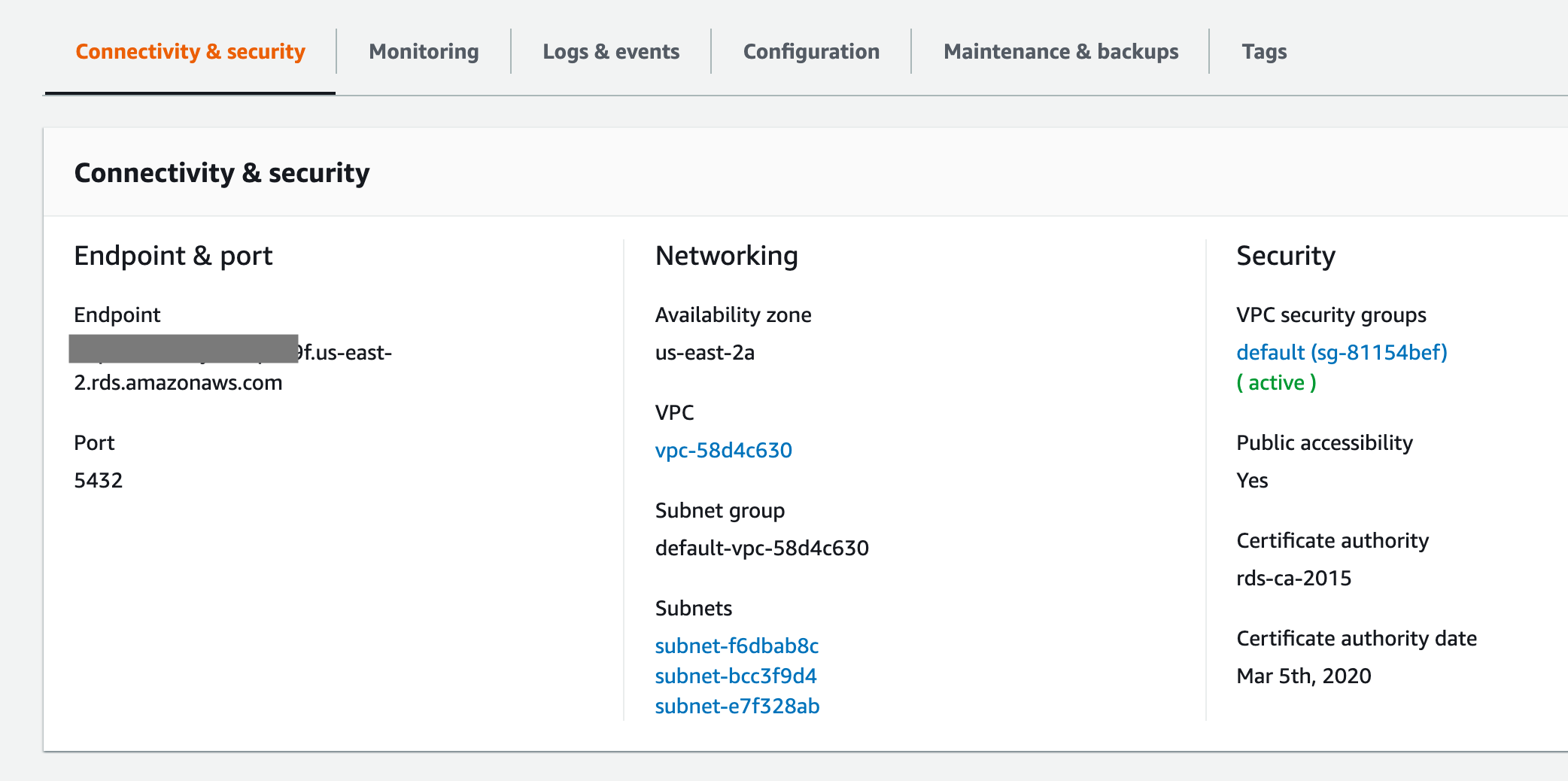

- Once the database instance is created, click on it, which will open the Summary section.

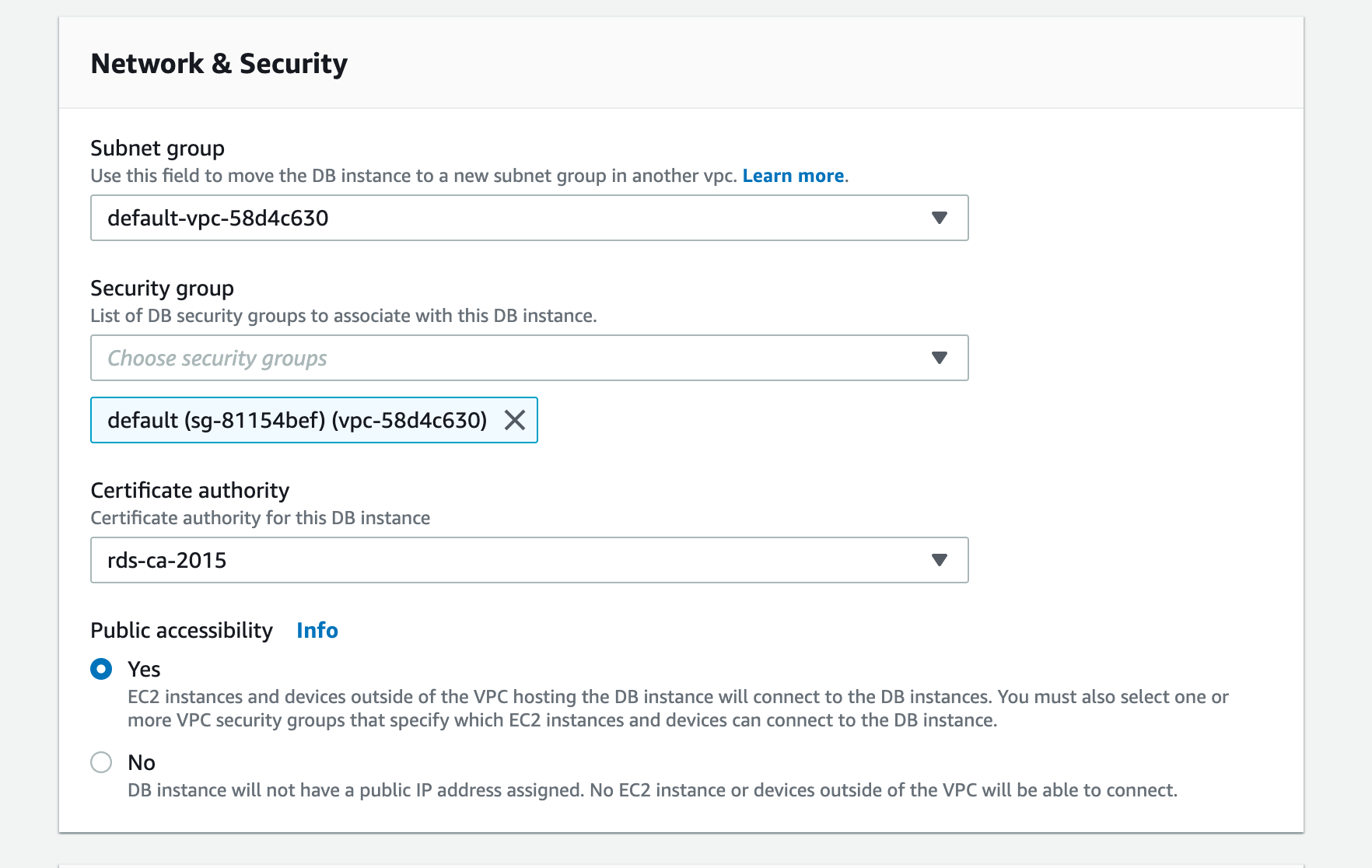

- Under the first tab Connectivity & security, change the Public accessibility option to Yes.

- click on the Modify button and set this option to Yes.

- Back to the Connectivity & security tab, copy the Endpoint. Your database is now ready to be used.

- We now need to create the Impressions table in our database, we do need to install postgresql client in a desktop, for information on how to install it, please check this link. Once its installed, connect to your database using this command line:

$ psql -h ***************.us-east-2.rds.amazonaws.com --username=postgresPassword for user postgres: *********

psql (11.1, server 11.5)

SSL connection (protocol: TLSv1.2, cipher: ECDHE-RSA-AES256-GCM-SHA384, bits: 256, compression: off)

Type "help" for help.

postgres=> - Switch to split database and run the SQL below to create the table:

postgres=> \c split

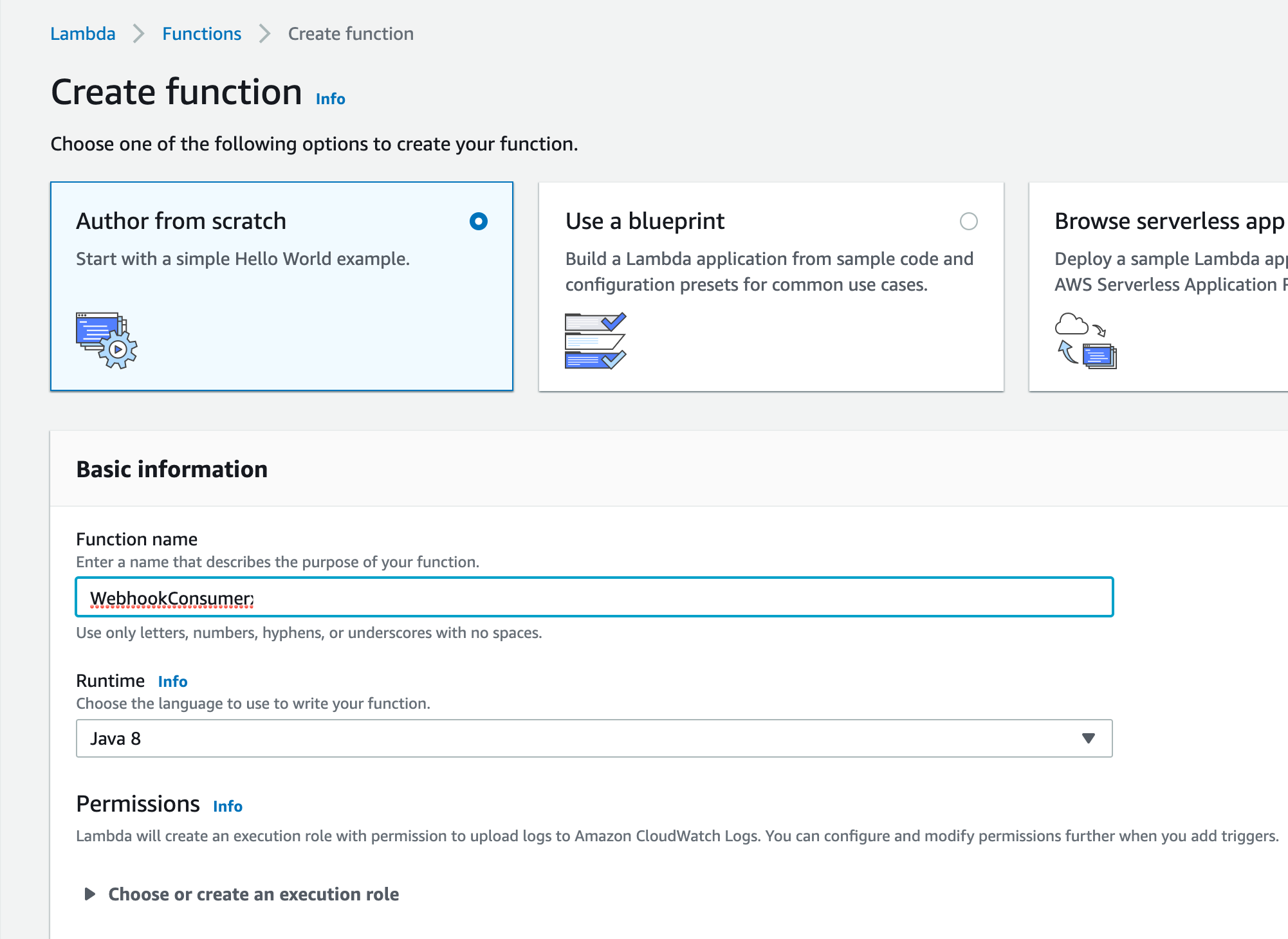

split=> create table impressions (key varchar(500), split varchar(1000), environment varchar(1000), treatment varchar(400), label varchar(500), time varchar(100), splitVersionNumber varchar(100)); - The Second service needed is AWS Lambda, go back to AWS console and select AWS->Lambda, click on Create function button, choose Author from scratch option, use Java 8 for runtime engine.

- Next step is to create the Java code for Lambda service, we will use Eclipse Maven project with the following dependencies:

<dependency>

<groupId>com.amazonaws</groupId>

<artifactId>aws-lambda-java-core</artifactId>

<version>1.0.0</version>

</dependency>

<dependency>

<groupId>org.postgresql</groupId>

<artifactId>postgresql</artifactId>

<version>42.2.8</version>

</dependency> - Insert the code below in the class (the class name here is WebhookConsumer.java), make sure to replace the RDS Database connection string and postgres user password. The code below will convert the JSON structure passed from the POST request to Dictionary objects, then loop through each Impression block to insert it into the database.

package splitsupport;

import java.util.ArrayList;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

import java.util.Properties;

import com.amazonaws.services.lambda.runtime.Context;

import com.amazonaws.services.lambda.runtime.RequestHandler;

import java.sql.*;

public class WebhookConsumer implements RequestHandler<Object, String> {

@Override

public String handleRequest(Object input, Context context) {

String treatment="";

try {

String url = "jdbc:postgresql://*************.us-east-2.rds.amazonaws.com:5432/split";

Properties props = new Properties();

props.setProperty("user","postgres");

props.setProperty("password","*******");

Connection conn = DriverManager.getConnection(url, props);

Statement st = conn.createStatement();

List<Map<String , String>> impressions = StringToHash(input.toString());

for (Map<String, String> imp1 : impressions) {

// Implement the line below if you need to insert only unique keys per userid, label, treatment and split version

if RecordNotExit(conn, imp1.get("key"), imp1.get("treatment"), imp1.get("split"), imp1.get("label"), imp1.get("environmentName"), imp1.get("splitVersionNumber"))

treatment = imp1.get("treatment");

String SQL = "INSERT INTO impressions(key, split, environment, treatment, time, label, splitVersionNumber) VALUES(?,?,?,?,?,?,?)";

PreparedStatement pstmt = conn.prepareStatement(SQL, Statement.RETURN_GENERATED_KEYS);

pstmt.setString(1, imp1.get("key"));

pstmt.setString(2, imp1.get("split"));

pstmt.setString(3, imp1.get("environmentName"));

pstmt.setString(4, imp1.get("treatment"));

pstmt.setString(5, imp1.get("time"));

pstmt.setString(6, imp1.get("label"));

pstmt.setString(6, imp1.get("splitVersionNumber"));

int affectedRows = pstmt.executeUpdate();

if (affectedRows < 1) {

treatment="Failed";

} else {

treatment="Success";

}

}

}

} catch (Exception e) {

System.out.print("Exception: "+e.getMessage());

return "Exception: "+e.getMessage();

}

return treatment;

}

Public boolean RecordNotExit(Connection conn, String key, String treatment, String split, String label, String environmentName, String splitVersionNumber) {

String SQL = "SELECT * FROM impressions WHERE key='"+key+"' AND split='"+split+"' AND environment='"+environment+"' and treatment='"+treatment+"' and label='"+label+"' and splitVersionNumber='"+splitVersionNumber+"'";

Statement stmt = conn.createStatement();

ResultSet resultSet = statement.executeQuery(SQL);

boolean returnFlag=false;

while (resultSet.next()) {

returnFlag=true;

break;

}

return returnFlag;

}

Public static List<Map<String , String>> StringToHash(String decomp) {

List<Map<String , String>> arrayRecord = new ArrayList<Map<String,String>>();

if (decomp.contains("}, {")) {

for(final String entry : decomp.split("},")) {

Map<String,String> oneRecord = new HashMap<String, String>();

for(final String parts : entry.split(",")) {

final String[] record = parts.split("=");

String recordOne = record[0].replace("[{", "");

recordOne = recordOne.replace("{", "");

String recordTwo = record[1].replace("}]", "");

oneRecord.put(recordOne.trim(), recordTwo.trim());

}

arrayRecord.add(oneRecord);

}

} else {

Map<String,String> oneRecord = new HashMap<String, String>();

for(final String parts : decomp.split(",")) {

final String[] record = parts.split("=");

String recordOne = record[0].replace("[{", "");

String recordTwo = record[1].replace("}]", "");

oneRecord.put(recordOne.trim(), recordTwo.trim());

}

arrayRecord.add(oneRecord);

}

return arrayRecord;

}

}

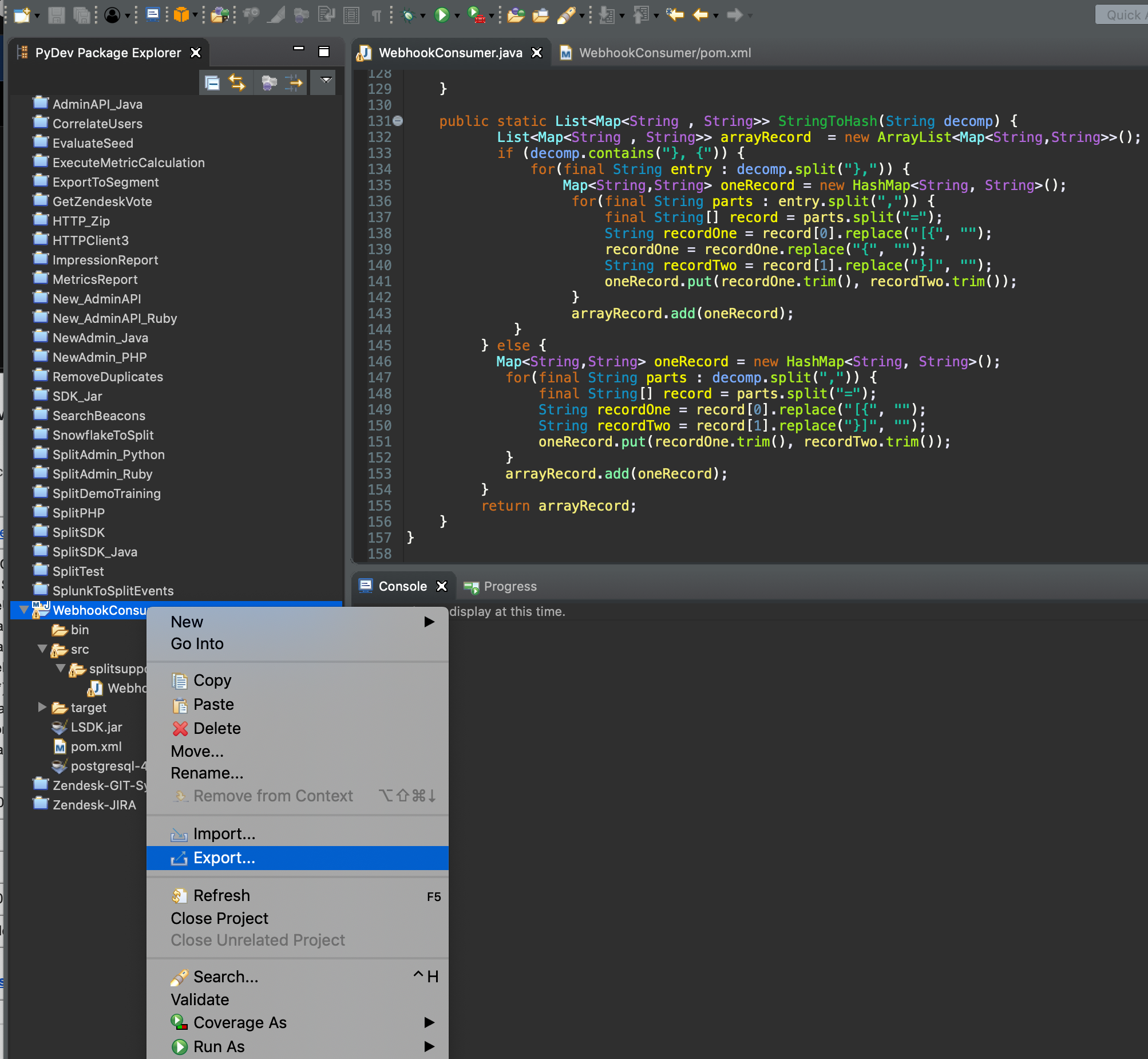

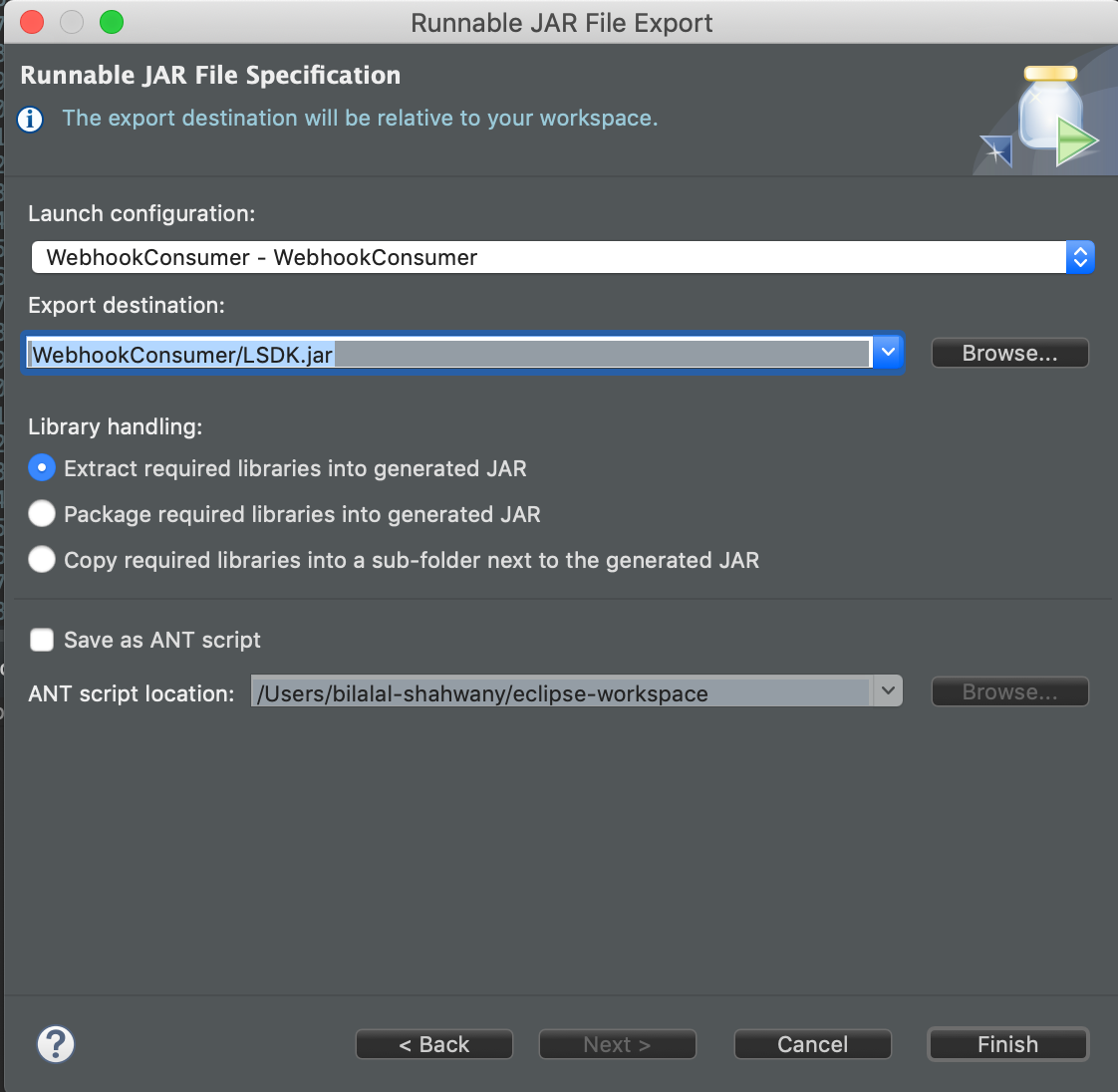

} - Export a self-contained JAR file for your project, in Eclipse, right-click on the project name and select Export.

- Select Runnable JAR File option and click Next, then specify the JAR file name and click Finish.

- Back to AWS Lambda page, under Function code section, update the Handler box with the content below:

splitsupport.WebhookConsumer::handleRequestThen upload the new created JAR file.

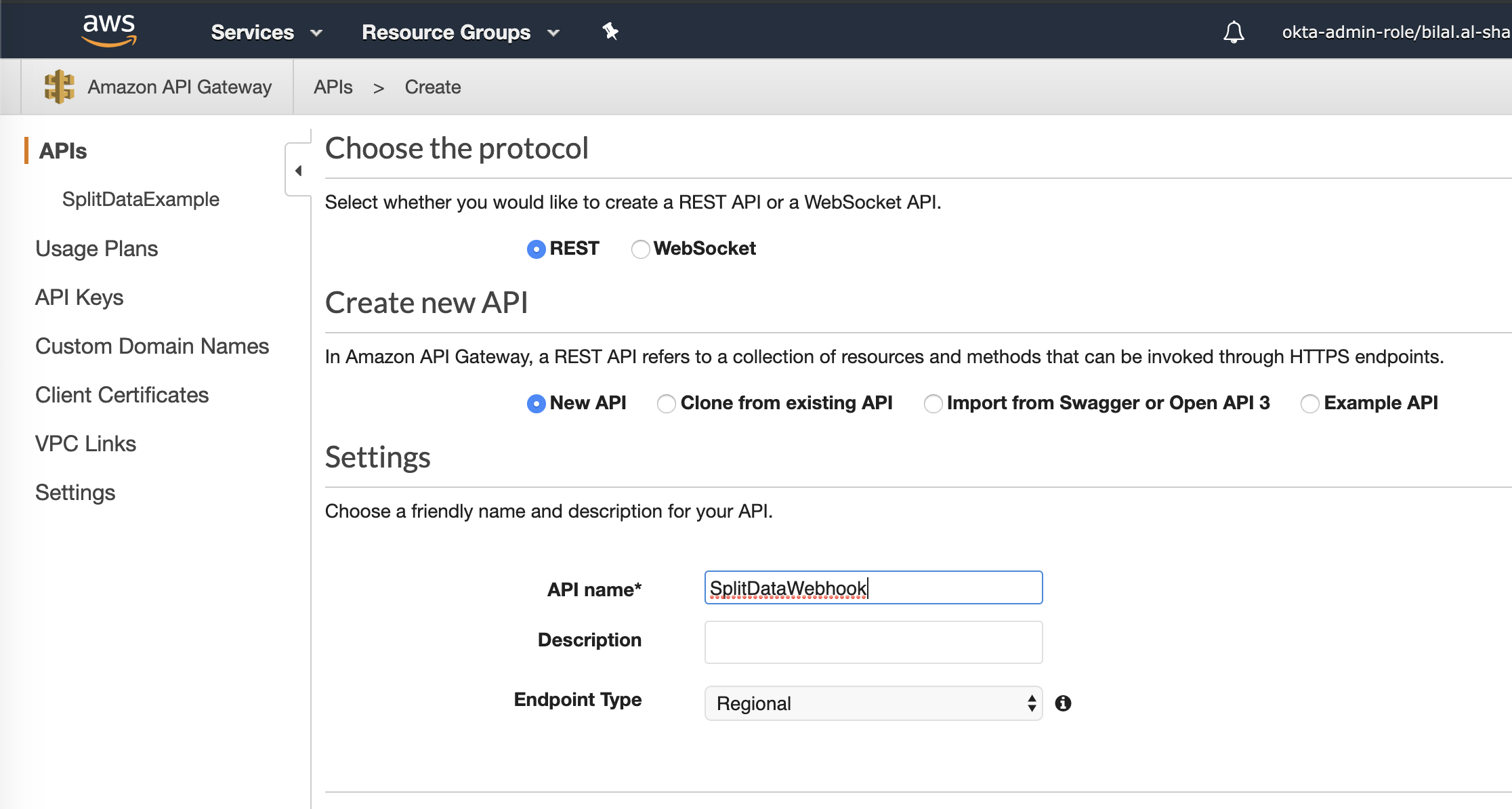

- The Third service needed is the API Gateway, select Services->API Gateway.

- Click on Create API button, and use Rest API and New API options, type a name for your API service and click Create API.

- Click on your new created API Service, click Actions list to add POST Method Execution, select Lambda Function for Integration type and type the Lambda function name created previously.

- Since Split zip the post body when calling the Webhook, we need to enable binary type for our API Gateway, under Settings, add */* in Binary Media Types and click on Save Changes.

- The last step is to create a Stage for your API Gateway, and generate the webhook URL under Stages page, click on Create button.

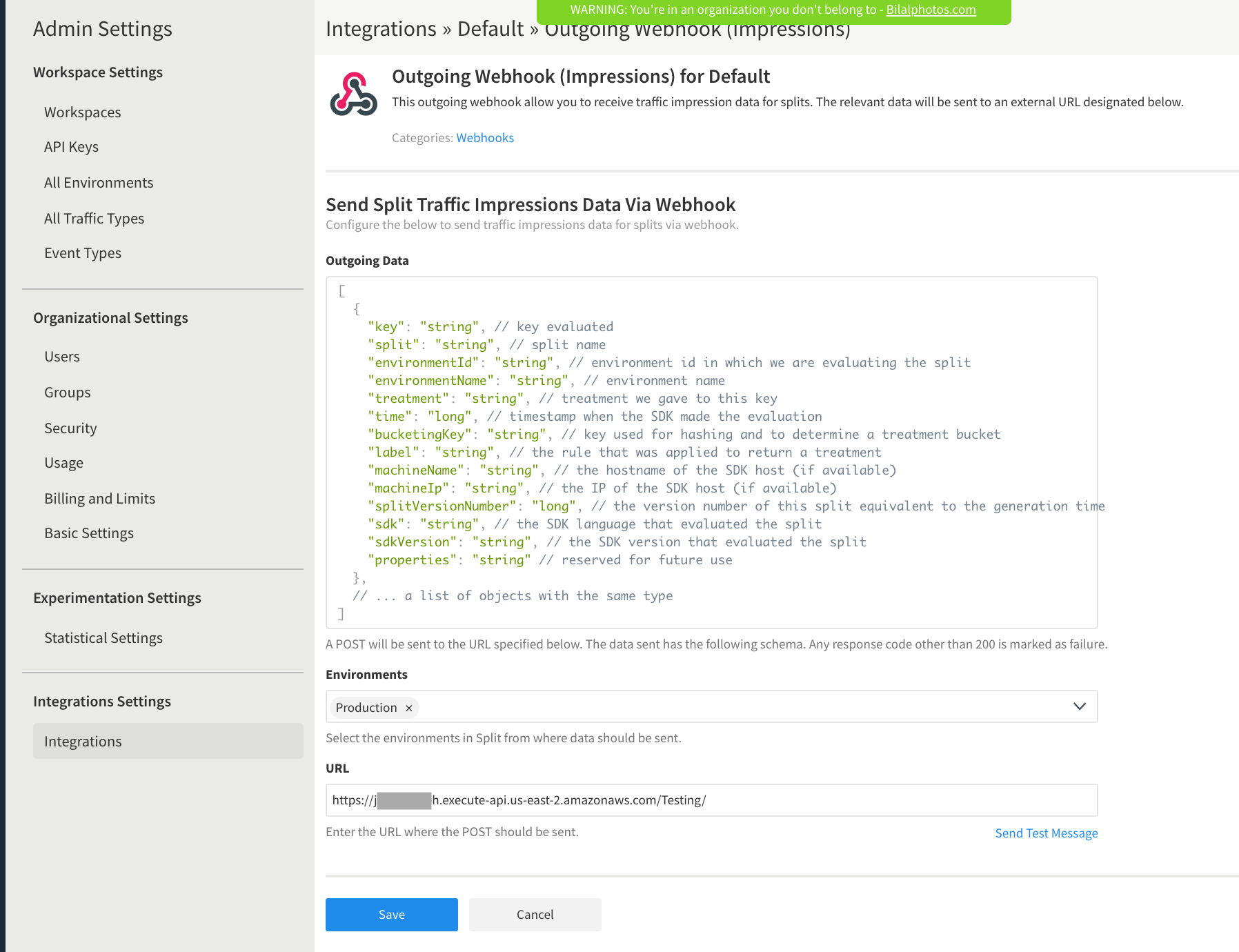

- Your Webhook service is ready now, login to Split user interface, go to Admin Settings page, click on Integrations, and click Add button for Outgoing Webhook (Impressions).

- Select which environment's impressions to export, and paste the API Gateway URL, and click Save.

- The integration is setup now, once new Impressions are generated, they will be inserted into the RDS Database. For testing purposes, make sure couple Impressions are generated in any split in the environment, then from the command line psql console, verify if the impressions are inserted to the database:

split=> select * from impressions ;

key | split | environment | treatment | label | time

----------+----------------+-------------+-----------+--------------+---------------

alphonso | sample_feature | Production | off | default rule | 1572632114350

bob | sample_feature | Production | on | whitelisted | 1572632224436

(2 rows) -

The attached Python framework can be used to extract the data and generate csv file, here is how to use it:

- Install the Postgresql python library from command line.

-

pip install psycopg2

-

- Download the framework classes from this link.

- Update the following files:

- Constants.py - This include the PostgreSQL connection and username/password variables, and generated report and log files path.

- Main.py - This is the main class to run, the variables below can be set to filter out the data needed:

- startDate: The start date in Epoch Time

- endDate: End date in Epoch Time

- environmentName: Set the environment name

- userKey: Set specific user key

- labelName: Set the rule name

- treatment: Set the treatment name

- splitName: Set the Split Name.

- Install the Postgresql python library from command line.

Here is a sample of the generated csv report:

User Key, Split, Environment, Treatment, Rule, Time

alphonso,sample_feature,Production,off,default rule,1572632114350

bob,sample_feature,Production,on,whitelisted,1572632224436

anonymous80008,front_end_packages_anonymous,Production,checked,default rule,1572894348393

anonymous652788,front_end_packages_anonymous,Production,checked,default rule,1572894351426

anonymous484843,front_end_packages_anonymous,Production,unchecked,default rule,1572894350414

anonymous854427,front_end_packages_anonymous,Production,unchecked,default rule,1572894349404

anonymous652788,front_end_discount_coupon_anonymous,Production,high,default rule,1572894351425

anonymous854427,front_end_discount_coupon_anonymous,Production,high,default rule,1572894349403

anonymous484843,front_end_discount_coupon_anonymous,Production,high,default rule,1572894350413

anonymous80008,front_end_discount_coupon_anonymous,Production,low,default rule,1572894348392

anonymous28108,front_end_discount_coupon_anonymous,Production,off,default rule,1572894352430

Comments

0 comments

Please sign in to leave a comment.